Publications

With the rapid growth of technology use, particularly among younger generations, the prevalence of hate speech on the internet has become an urgent global concern. This paper addresses that concern through an extensive investigation of three distinct hate speech detection tasks across a linguistically diverse set of languages. The first task is sentence-level hate and offensive speech classification in Gujarati and Sinhala. The second task extends this to fine-grained BIO tagging, which identifies exactly where hate speech occurs within a sentence. The third task covers hate speech classification of social media data in Bengali, Bodo, and Assamese, labelling each post as hateful or not. Using state-of-the-art deep learning techniques tailored to each language’s characteristics, this work contributes to the development of robust, culturally sensitive hate speech detection systems, which are essential for safer online spaces and cross-cultural understanding.
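
To make the BIO scheme of the second task concrete, here is a minimal Python sketch that converts annotated hate spans into B/I/O tags and back. The span format (end-exclusive token offsets) and the `HATE` label are assumptions for illustration, not the shared task's actual annotation format.

```python
def spans_to_bio(tokens, spans, label="HATE"):
    """Convert end-exclusive token spans [(start, end), ...] into BIO tags."""
    tags = ["O"] * len(tokens)
    for start, end in spans:
        tags[start] = f"B-{label}"          # first token of the hateful span
        for i in range(start + 1, end):
            tags[i] = f"I-{label}"          # tokens inside the span
    return tags


def bio_to_spans(tags):
    """Recover end-exclusive spans from a BIO tag sequence."""
    spans, start = [], None
    for i, tag in enumerate(tags):
        if tag == "O" or tag.startswith("B-"):
            if start is not None:           # close the span that was open
                spans.append((start, i))
                start = None
            if tag.startswith("B-"):
                start = i
        elif tag.startswith("I-") and start is None:
            start = i                       # tolerate an I- tag with no preceding B-
    if start is not None:
        spans.append((start, len(tags)))
    return spans


tokens = ["you", "are", "a", "total", "idiot", "today"]
tags = spans_to_bio(tokens, [(3, 5)])
print(tags)                # ['O', 'O', 'O', 'B-HATE', 'I-HATE', 'O']
print(bio_to_spans(tags))  # [(3, 5)]
```

A sequence tagger trained on such tags predicts one label per token, and `bio_to_spans` turns its output back into phrase-level hate spans for evaluation.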

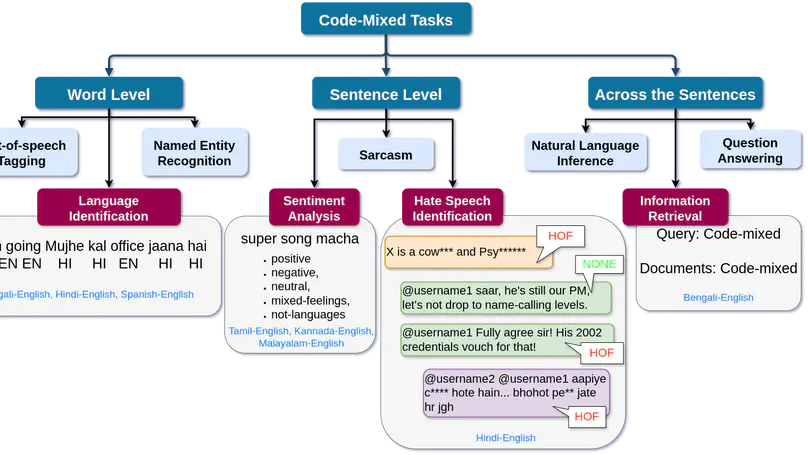

This study addresses word-level language identification in code-mixed Tulu-English text, a task that is crucial for handling the linguistic diversity observed on social media platforms. The CoLITunglish shared task gave multiple teams a common platform to tackle this challenge, with the aim of improving our understanding of, and ability to handle, code-mixed language data. Our system uses Multilingual BERT (mBERT) for word embeddings and a Bi-LSTM model for sequence representation. It achieved a Precision of 0.74, indicating that most of its predicted language labels are correct. Its Recall of 0.571, however, shows that it misses a substantial share of the gold language labels, so recall is the main area for improvement. The resulting F1 score, which balances precision and recall, was 0.602, a reasonable overall performance. Ultimately, our work contributes to advancing language understanding in multilingual digital communication.
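
Word-level tagging with mBERT requires mapping word labels onto subword tokens. The abstract does not say how this was done; a common convention, sketched below under that assumption, gives each word's label to its first subword and masks the remaining subwords with -100, the default `ignore_index` of PyTorch's `CrossEntropyLoss`. The `word_ids` list mimics what Hugging Face fast tokenizers return from `BatchEncoding.word_ids()`; the language labels and the `label2id` mapping are hypothetical.

```python
IGNORE_INDEX = -100  # label value skipped by PyTorch's CrossEntropyLoss by default


def align_word_labels(word_ids, word_labels, label2id):
    """Give each word's label to its first subword and mask everything else."""
    aligned, previous = [], None
    for wid in word_ids:
        if wid is None:              # special tokens such as [CLS] and [SEP]
            aligned.append(IGNORE_INDEX)
        elif wid != previous:        # first subword of a new word
            aligned.append(label2id[word_labels[wid]])
        else:                        # continuation subword of the same word
            aligned.append(IGNORE_INDEX)
        previous = wid
    return aligned


# Hypothetical sentence of 3 words split into 6 subwords plus [CLS]/[SEP].
word_ids = [None, 0, 0, 1, 2, 2, 2, None]
word_labels = ["Tulu", "English", "Mixed"]          # one label per word
label2id = {"Tulu": 0, "English": 1, "Mixed": 2}    # hypothetical label set
print(align_word_labels(word_ids, word_labels, label2id))
# [-100, 0, -100, 1, 2, -100, -100, -100]
```

With a Bi-LSTM on top of mBERT, an equally plausible alternative is to pool each word's subword embeddings into one vector per word before the recurrent layer.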

Stopwords occur throughout documents, yet their presence in a sentence has little semantic impact, and as a group they carry almost no semantic value. Stopwords should therefore be removed from documents to obtain a better representation of the text. In this paper, we explore and evaluate the effect of stopwords on the performance of information retrieval over code-mixed social media data in Indian languages such as Bengali–English. Considerable research has been done on sentiment analysis, language identification, and language generation for code-mixed languages, but no work has addressed stopword removal from code-mixed documents; this gap motivates our work. We compare the impact of corpus-based and non-corpus-based stopword removal on information retrieval for code-mixed data, and we ask how to find the best stopword list for each constituent language of a code-mixed language. We observed that corpus-based stopword removal generally improved Mean Average Precision (MAP) by 16% over non-corpus-based stopword removal. For the two languages, separate TF-IDF threshold values were tuned jointly, which yielded the optimal stopword lists.
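
The paper builds corpus-based stopword lists by tuning TF-IDF thresholds but does not give the exact scoring rule. A minimal sketch under one plausible assumption, that a term is a stopword when its highest TF-IDF score anywhere in the corpus falls below a threshold, is shown below. TF is taken as count divided by document length and IDF as log(N/df); both choices are assumptions. In the code-mixed setting the function would be run separately on the tokens of each constituent language, each with its own threshold.

```python
import math
from collections import Counter


def corpus_stopwords(docs, threshold):
    """Return terms whose maximum TF-IDF over the corpus is below `threshold`."""
    n = len(docs)
    tokenized = [doc.lower().split() for doc in docs]
    df = Counter(term for toks in tokenized for term in set(toks))
    best = {}
    for toks in tokenized:
        for term, count in Counter(toks).items():
            # Terms present in every document get IDF 0 and hence score 0.
            score = (count / len(toks)) * math.log(n / df[term])
            best[term] = max(best.get(term, 0.0), score)
    return {term for term, score in best.items() if score < threshold}


# Toy romanised Bengali-English documents (made up for illustration).
docs = [
    "ami tomake bhalobashi the movie",
    "the movie was bhalo",
    "the song is bhalo",
]
print(corpus_stopwords(docs, 0.05))  # {'the'}
```

Raising the threshold admits more terms into the list, which is the knob the paper reports tuning per language against retrieval quality.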

We are seeing an increase in hateful and offensive tweets and comments on social media platforms such as Facebook and Twitter, which affects our social lives. As a result, there is a growing need to identify online posts that violate accepted norms. For resource-rich languages such as English, identifying hateful and offensive posts has been well investigated, but the problem remains largely unexplored for low-resource languages such as Marathi. Code-mixing is also frequent on social media, so identifying conversational hate and offensive posts and comments in code-mixed languages is likewise challenging and unexplored. For three objectives of the HASOC 2022 shared task, we proposed approaches for recognizing offensive language on Twitter in Marathi and two code-mixed languages (i.e., Hinglish and German). Some tasks are binary classification (coarse-grained: deciding whether a tweet is hateful and offensive or not), while others are multi-class classification (fine-grained: further categorizing hate and offensive tweets as Standalone Hate or Contextual Hate). We concatenate the parent, comment, and reply data to create a dataset with additional context. We use Multilingual Bidirectional Encoder Representations from Transformers (mBERT), a pre-trained model, to obtain contextual representations of tweets. We carried out several experiments with various pre-processing methods and pre-trained models, and submitted the highest-scoring models to the competition. Our team (irlab@iitbhu) ranked 2nd out of 14, 7th out of 11, 6th out of 10, 4th out of 7, and 5th out of 6 for ICHCL Task 1, ICHCL Task 2, and Marathi Subtasks 3A, 3B, and 3C, respectively.
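
One way to realise the parent-comment-reply concatenation is to flatten each conversation thread into one training example per node, prefixing every comment or reply with the text above it. The thread dictionary layout (`text`, `label`, `comments`, `replies`) and the `[SEP]` separator are assumptions for this sketch, not the ICHCL data format.

```python
def flatten_thread(thread, sep=" [SEP] "):
    """Turn one hypothetical conversation thread into (text, label) examples.

    Each comment is prefixed with its parent tweet, and each reply with both
    the parent and the comment, so the classifier sees the conversational context.
    """
    parent = thread["text"]
    examples = [(parent, thread["label"])]
    for comment in thread.get("comments", []):
        examples.append((parent + sep + comment["text"], comment["label"]))
        for reply in comment.get("replies", []):
            text = parent + sep + comment["text"] + sep + reply["text"]
            examples.append((text, reply["label"]))
    return examples


thread = {
    "text": "P", "label": "NOT",
    "comments": [{"text": "C", "label": "HOF",
                  "replies": [{"text": "R", "label": "HOF"}]}],
}
print(flatten_thread(thread))
# [('P', 'NOT'), ('P [SEP] C', 'HOF'), ('P [SEP] C [SEP] R', 'HOF')]
```

Because long threads can exceed mBERT's input limit, the truncation side matters: truncating from the left keeps the text being classified and drops the most distant context.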

Social media platforms have seen a significant rise in user engagement in recent years, and more and more people express their views and ideas on them. There is a pressing need for an accurate system that classifies text by sentiment. In this paper, our team IRLab@IITBHU presents the system we submitted to the shared task “Sentiment Analysis and Homophobia Detection of YouTube Comments in Code-Mixed Dravidian Languages”, organized by DravidianCodeMix 2022 at the Forum for Information Retrieval Evaluation (FIRE) 2022, which aims to reveal how sentiment is expressed in code-mixed scenarios. For Task A, we used an mBERT model together with word-level language tags to classify YouTube comments as positive, negative, neutral, or mixed emotions. For Task B, we applied basic preprocessing and built an mBERT model to identify homophobic, transphobic, and non-anti-LGBT+ content in the given corpus. For Task A, our system achieved the best results, ranking first on the Malayalam-English and Kannada-English code-mixed datasets with F1 scores of 0.72 and 0.66, respectively.
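
The abstract does not specify how the word-level language tags were combined with mBERT. Two simple, hypothetical options are sketched below: interleaving a tag marker after each word, or keeping the text intact and passing the tag sequence as a second text segment. The `<ml>`/`<en>` markers and the example tokens are illustrative only.

```python
def interleave_language_tags(tokens, lang_tags):
    """Option 1 (hypothetical): insert a <lang> marker after each word."""
    if len(tokens) != len(lang_tags):
        raise ValueError("each token needs exactly one language tag")
    return " ".join(f"{tok} <{tag}>" for tok, tag in zip(tokens, lang_tags))


def tags_as_second_segment(tokens, lang_tags):
    """Option 2 (hypothetical): return (text, tag sequence) as a text pair."""
    if len(tokens) != len(lang_tags):
        raise ValueError("each token needs exactly one language tag")
    return " ".join(tokens), " ".join(lang_tags)


tokens = ["padam", "super", "aanu"]
tags = ["ml", "en", "ml"]
print(interleave_language_tags(tokens, tags))  # padam <ml> super <en> aanu <ml>
print(tags_as_second_segment(tokens, tags))    # ('padam super aanu', 'ml en ml')
```

If the interleaved markers are used, they would normally be registered as new tokens (for example with Hugging Face's `tokenizer.add_tokens(...)` followed by `model.resize_token_embeddings(len(tokenizer))`); otherwise mBERT splits them into ordinary subwords. The pair form can be passed to a Hugging Face tokenizer as `tokenizer(text, text_pair)`.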

This paper describes the IRlab@IITBHU system for the Dravidian-CodeMix - FIRE 2021 task on Sentiment Analysis for Dravidian Languages in Code-Mixed Text, covering the language pairs Tamil-English (TA-EN), Kannada-English (KN-EN), and Malayalam-English (ML-EN). We report the outputs of three models in this paper, although we submitted only one model for sentiment analysis of all the code-mixed datasets. Run-1 was obtained from FastText embeddings with multi-head attention, Run-2 used meta-embedding techniques, and Run-3 used the Multilingual BERT (mBERT) model. Run-2 outperformed Run-1 and Run-3 for all the language pairs.
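
The abstract mentions meta-embedding techniques for Run-2 without naming them. Two standard, simple forms are concatenating or averaging several pre-trained embeddings of the same word; the sketch below assumes both, with each source vector L2-normalised first and shorter vectors zero-padded before averaging. Which embedding sources were combined is not stated, and the vectors below are toy values.

```python
import math


def l2_normalise(vec):
    """Scale a vector to unit length so sources with different norms are comparable."""
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def concat_meta(*sources):
    """Concatenation meta-embedding: normalised source vectors placed end to end."""
    out = []
    for vec in sources:
        out.extend(l2_normalise(vec))
    return out


def average_meta(*sources):
    """Averaging meta-embedding: zero-pad to the largest dimension, then average."""
    dim = max(len(v) for v in sources)
    padded = [l2_normalise(v) + [0.0] * (dim - len(v)) for v in sources]
    return [sum(col) / len(padded) for col in zip(*padded)]


# Toy embeddings of one word from two hypothetical sources (2-D and 3-D).
emb_a = [3.0, 4.0]
emb_b = [0.0, 2.0, 0.0]
print(concat_meta(emb_a, emb_b))   # [0.6, 0.8, 0.0, 1.0, 0.0]
print(average_meta(emb_a, emb_b))  # approximately [0.3, 0.9, 0.0]
```

Concatenation keeps all information but grows the dimension with every source; averaging keeps the dimension fixed, which is why it needs the zero-padding step when the sources differ in size.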

Hate speech and offensive content identification is one of the most challenging problems in natural language processing, made pressing by the growing presence of such content on online social media. This paper describes our Transformer-based solutions for identifying offensive language on Twitter in three languages (i.e., English, Hindi, and Marathi) and one code-mixed language (English-Hindi), submitted to Subtask 1A, Subtask 1B, and Subtask 2 of the HASOC 2021 shared task. The highest-scoring models were used for our submissions in the competition, which ranked our IRLab@IITBHU team 16th of 56, 18th of 37, 13th of 34, 7th of 24, 12th of 25, and 6th of 16 for English Subtask 1A, English Subtask 1B, Hindi Subtask 1A, Hindi Subtask 1B, Marathi Subtask 1A, and English-Hindi Code-Mix Subtask 2, respectively.

This paper describes the IRlab@IIT-BHU system for OffensEval 2020. We use an SVM with TF-IDF features to identify and categorize hate speech and offensive language on social media in two languages. In Subtask A, we used a linear SVM classifier to detect abusive content in tweets, achieving macro F1 scores of 0.779 for Arabic and 0.718 for Greek.
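
The TF-IDF plus linear SVM pipeline can be sketched without external libraries. This version is a stand-in built on stated assumptions: whitespace tokenisation, smoothed IDF, and the Pegasos stochastic sub-gradient solver (hinge loss, L2 regularisation, no bias term) rather than whatever SVM implementation the original system used. The training tweets are made up, and real Arabic or Greek text would need a proper tokenizer.

```python
import math
from collections import Counter


def tokenize(text):
    return text.lower().split()  # whitespace tokenisation (simplifying assumption)


def fit_idf(docs):
    """Smoothed IDF: log((1 + N) / (1 + df)) + 1."""
    n = len(docs)
    df = Counter(term for doc in docs for term in set(tokenize(doc)))
    return {term: math.log((1 + n) / (1 + c)) + 1 for term, c in df.items()}


def tfidf(doc, idf):
    """L2-normalised sparse TF-IDF vector; terms unseen in training are dropped."""
    counts = Counter(t for t in tokenize(doc) if t in idf)
    vec = {t: c * idf[t] for t, c in counts.items()}
    norm = math.sqrt(sum(v * v for v in vec.values())) or 1.0
    return {t: v / norm for t, v in vec.items()}


def dot(w, x):
    return sum(w.get(t, 0.0) * v for t, v in x.items())


def train_linear_svm(X, y, lam=0.1, epochs=50):
    """Pegasos SGD on the L2-regularised hinge loss (no bias); y in {-1, +1}."""
    w, step = {}, 0
    for _ in range(epochs):
        for x, label in zip(X, y):
            step += 1
            eta = 1.0 / (lam * step)
            inside_margin = label * dot(w, x) < 1  # checked with w before the update
            for t in w:                             # shrink: regulariser gradient
                w[t] *= 1.0 - eta * lam
            if inside_margin:                       # hinge-loss sub-gradient step
                for t, v in x.items():
                    w[t] = w.get(t, 0.0) + eta * label * v
    return w


# Toy usage (OFF = offensive, NOT = not offensive).
train_texts = [
    "you are an idiot", "shut up you idiot", "stupid idiot go away",
    "have a nice day", "lovely weather today", "thanks for the nice words",
]
train_labels = ["OFF", "OFF", "OFF", "NOT", "NOT", "NOT"]

idf = fit_idf(train_texts)
X = [tfidf(text, idf) for text in train_texts]
y = [1 if label == "OFF" else -1 for label in train_labels]
w = train_linear_svm(X, y)

preds = ["OFF" if dot(w, tfidf(text, idf)) > 0 else "NOT"
         for text in ["what an idiot", "nice weather"]]
print(preds)  # ['OFF', 'NOT']
```

In practice a library implementation, such as scikit-learn's `TfidfVectorizer` feeding a `LinearSVC`, would normally replace the hand-written solver; the sketch only shows what those components compute.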

This paper reports our submission to shared Task 2, Identification of Informative COVID-19 English Tweets, at W-NUT 2020. We tried several techniques and briefly describe here the two models that showed promising results for tweet classification: DistilBERT and FastText. DistilBERT achieved an F1 score of 0.7508 on the test set, the best of our submissions.
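
A distinctive feature of FastText is that it can represent each word through its character n-grams, with `<` and `>` marking word boundaries, so misspellings and rare word fragments common in tweets still share features with known words. The function below sketches that n-gram extraction; the 3-to-6 character range follows the fastText paper, while the settings used in this submission are not reported.

```python
def char_ngrams(word, minn=3, maxn=6):
    """Character n-grams of a word with fastText-style boundary markers."""
    marked = f"<{word}>"
    grams = []
    for n in range(minn, maxn + 1):
        for i in range(len(marked) - n + 1):
            gram = marked[i:i + n]
            if gram != marked:      # the full word is added once, below
                grams.append(gram)
    grams.append(marked)            # the whole word is kept as its own feature
    return grams


print(char_ngrams("where", 3, 3))  # ['<wh', 'whe', 'her', 'ere', 're>', '<where>']
print(char_ngrams("go", 3, 4))     # ['<go', 'go>', '<go>']
```

In fastText's subword model a word vector is the sum of the vectors of these n-grams, which is why the boundary markers matter: `<wh` (a prefix) and `wh` inside a word become different features.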

This paper describes the IRlab@IITBHU system for the Dravidian-CodeMix - FIRE 2020 task on Sentiment Analysis for Dravidian Languages in Code-Mixed Text, covering the language pairs Tamil-English (TA-EN) and Malayalam-English (ML-EN). We submitted three models for sentiment analysis of the code-mixed TA-EN and ML-EN datasets. Run-1 combined BERT with a logistic regression classifier, Run-2 combined DistilBERT with a logistic regression classifier, and Run-3 used a fastText model. Run-3 outperformed Run-1 and Run-2 on both datasets. We obtained an F1-score of 0.58 (rank 8/14) for the TA-EN language pair and an F1-score of 0.63 (rank 11/15) for ML-EN.
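
Runs 1 and 2 pair a pre-trained encoder with a logistic regression classifier. A minimal sketch of the second stage, assuming the sentence vectors (for example the frozen [CLS] output of BERT or DistilBERT) have already been extracted, is multinomial logistic regression trained by stochastic gradient descent. The 2-D vectors and the three class indices below are toy stand-ins for real embeddings and sentiment labels.

```python
import math


def softmax(scores):
    m = max(scores)                          # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def class_scores(W, b, x):
    return [sum(w_j * x_j for w_j, x_j in zip(W[k], x)) + b[k] for k in range(len(W))]


def train_logreg(X, y, n_classes, lr=0.5, epochs=200):
    """Multinomial logistic regression fitted by plain SGD on cross-entropy loss."""
    dim = len(X[0])
    W = [[0.0] * dim for _ in range(n_classes)]
    b = [0.0] * n_classes
    for _ in range(epochs):
        for x, label in zip(X, y):
            probs = softmax(class_scores(W, b, x))
            for k in range(n_classes):
                # Gradient of the loss w.r.t. class score k: p_k - 1[k == label]
                grad = probs[k] - (1.0 if k == label else 0.0)
                for j in range(dim):
                    W[k][j] -= lr * grad * x[j]
                b[k] -= lr * grad
    return W, b


def predict(W, b, x):
    scores = class_scores(W, b, x)
    return max(range(len(scores)), key=scores.__getitem__)


# Toy 2-D "sentence embeddings" standing in for frozen encoder outputs.
X = [[1.0, 0.1], [0.9, -0.1], [0.1, 1.0], [-0.1, 0.9], [-1.0, -1.0], [-0.9, -1.1]]
y = [0, 0, 1, 1, 2, 2]
W, b = train_logreg(X, y, n_classes=3)
print([predict(W, b, x) for x in [[1.0, 0.0], [0.0, 1.0], [-1.0, -0.9]]])  # [0, 1, 2]
```

Keeping the encoder frozen makes this stage cheap to train, which is one reason the encoder-plus-logistic-regression design is a common baseline; fine-tuning the encoder end to end is the usual alternative.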